GenAI startup Decart has launched MirageLSD, an AI video model that transforms live video feeds on the fly. The system is meant to solve two big problems with earlier AI video tools: slow rendering and rapid image quality loss over time.

AI video models are often slow and typically only manage to generate short, five- to ten-second clips before the visuals start to degrade. MirageLSD takes a different approach. Instead of generating entire video sequences at once, the model creates each frame individually.

The system uses a window of recent frames, the current video input, and the user’s prompt to predict the next frame as the stream unfolds. Each new frame is immediately fed back into the next calculation step, so the model can react instantly to changes in the live feed. This setup enables continuous, real-time video transformation at 20 frames per second and 768 x 432 resolution, keeping latency low enough for interactive applications.
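The frame-by-frame loop described above can be sketched in a few lines of Python. This is only an illustration of the feedback structure, not Decart's code: the names `stream_transform` and `toy_predictor`, the window size, and the list-of-floats "frames" are all assumptions made for the example, and the predictor is a trivial stand-in for the actual model.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List

Frame = List[float]  # toy stand-in for an image tensor


def stream_transform(
    input_frames: Iterable[Frame],
    prompt: str,
    predict_next: Callable[[List[Frame], Frame, str], Frame],
    window_size: int = 4,  # assumed value; the article gives no window length
) -> Iterator[Frame]:
    """Yield one output frame per input frame, feeding each output back as context."""
    history: Deque[Frame] = deque(maxlen=window_size)  # oldest frames drop off automatically
    for current in input_frames:
        out = predict_next(list(history), current, prompt)
        history.append(out)  # the new frame becomes context for the next step
        yield out


def toy_predictor(history: List[Frame], current: Frame, prompt: str) -> Frame:
    """Hypothetical placeholder model: blend the input with the mean of recent outputs."""
    if not history:
        return list(current)
    mean_prev = [sum(px) / len(history) for px in zip(*history)]
    return [0.5 * c + 0.5 * p for c, p in zip(current, mean_prev)]


frames = [[0.0, 0.0], [1.0, 1.0], [1.0, 1.0]]
outputs = list(stream_transform(frames, "anime style", toy_predictor, window_size=2))
```

Because the generator yields each frame as soon as it is computed, nothing waits for the rest of the stream, which is the property that makes a live feed workable.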

To keep video quality stable during longer sessions, Decart uses two training techniques. The first, called “diffusion forcing,” adds noise to each frame individually, teaching the model to clean up images without relying on earlier frames. This helps prevent errors from building up over time.
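A rough sketch of the per-frame noising step might look like the following. The article only says that noise is added to each frame individually, so the uniform noise schedule, the `max_sigma` parameter, and the function name are assumptions for illustration; a real training loop would then ask the model to recover the clean frames from these noisy ones.

```python
import random
from typing import List, Tuple

Frame = List[float]  # toy stand-in for an image tensor


def noise_frames_independently(
    clip: List[Frame], rng: random.Random, max_sigma: float = 1.0
) -> Tuple[List[Frame], List[float]]:
    """Give every frame its own noise level instead of one level for the whole clip."""
    noisy_clip: List[Frame] = []
    sigmas: List[float] = []
    for frame in clip:
        sigma = rng.uniform(0.0, max_sigma)  # drawn separately for each frame (assumed schedule)
        noisy_clip.append([px + rng.gauss(0.0, sigma) for px in frame])
        sigmas.append(sigma)
    return noisy_clip, sigmas


rng = random.Random(0)
clip = [[0.5] * 4 for _ in range(3)]
noisy_clip, sigmas = noise_frames_independently(clip, rng)
```

Since neighbouring frames end up with different amounts of noise, the model cannot assume its context is cleaner or dirtier than the frame it is working on.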

The second method, “history augmentation,” exposes the model to distorted or faulty frames during training, so it learns to spot and correct recurring mistakes instead of simply passing them along.
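In code, the idea could be approximated by corrupting some of the context frames before they reach the model. The sketch below is an assumption-laden toy: the article does not say which distortions Decart uses, so the uniform pixel jitter, the `corrupt_prob` and `strength` parameters, and the function name are all hypothetical.

```python
import random
from typing import List

Frame = List[float]  # toy stand-in for an image tensor


def augment_history(
    history: List[Frame],
    rng: random.Random,
    corrupt_prob: float = 0.5,  # assumed chance that a context frame is distorted
    strength: float = 0.2,      # assumed maximum per-pixel distortion
) -> List[Frame]:
    """Return a copy of the context frames with some of them randomly distorted."""
    augmented: List[Frame] = []
    for frame in history:
        if rng.random() < corrupt_prob:
            frame = [px + rng.uniform(-strength, strength) for px in frame]
        augmented.append(list(frame))
    return augmented


rng = random.Random(1)
history = [[0.5, 0.5], [0.6, 0.6], [0.7, 0.7]]
faulty_history = augment_history(history, rng, corrupt_prob=1.0)
```

During training, the target would stay the clean next frame while the context is the distorted one, so the model is rewarded for correcting flawed history rather than copying it.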

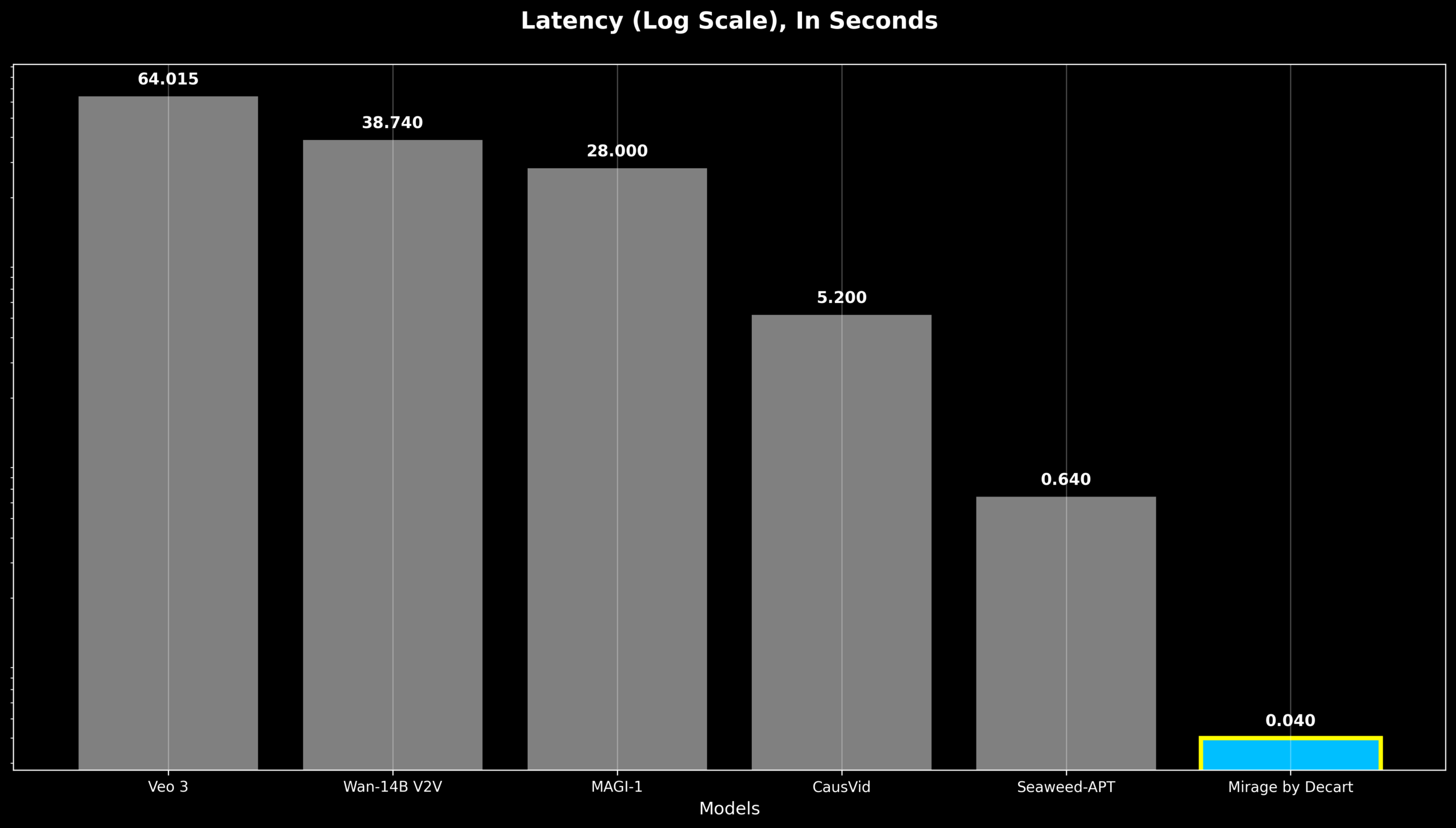

Decart has tuned MirageLSD specifically for Nvidia Hopper GPUs, using “architecture-aware pruning” to trim away less important parts of the model and boost both speed and efficiency. The team also applies “shortcut distillation,” training smaller models to replicate the results of larger ones, a process the company says leads to a 16x performance boost. As a result, each frame is processed in under 40 milliseconds, keeping latency low enough that most viewers won’t notice major lag.
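The two figures quoted for MirageLSD, 20 frames per second and under 40 milliseconds per frame, fit together as simple arithmetic. The helper names below are made up for the example, and the calculation uses only the numbers reported in the article.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def max_fps(frame_time_ms: float) -> float:
    """Highest frame rate a given per-frame processing time can sustain."""
    return 1000.0 / frame_time_ms


budget = frame_budget_ms(20)  # 50.0 ms per frame at 20 fps
headroom = budget - 40.0      # 10.0 ms left if a frame takes the full 40 ms
ceiling = max_fps(40.0)       # 25.0 fps if each frame takes exactly 40 ms
```

In other words, a 40-millisecond frame time leaves at least 10 milliseconds of each 50-millisecond slot for whatever else the pipeline has to do, which the article does not break down.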

MirageLSD does have some limitations. It currently processes only a small window of previous frames, so consistency can still decrease during longer videos. The model also struggles with major style changes and precise control over individual objects.

Mirage Platform Goes Live, With More Features on the Way

Decart has launched the Mirage platform alongside MirageLSD, with a web version already available and mobile apps for iOS and Android on the way. The platform targets livestreaming, video calls, and gaming. Decart plans regular updates throughout the summer, adding features like improved facial consistency, voice control, and more precise object control.

This is Decart’s second AI model, following its viral Minecraft project Oasis. Building MirageLSD took about six months. Other systems like StreamDiT can achieve similar speeds of up to 16 frames per second and also offer interactive capabilities, but they still lag behind top models like Google’s Veo 3 when it comes to image quality.