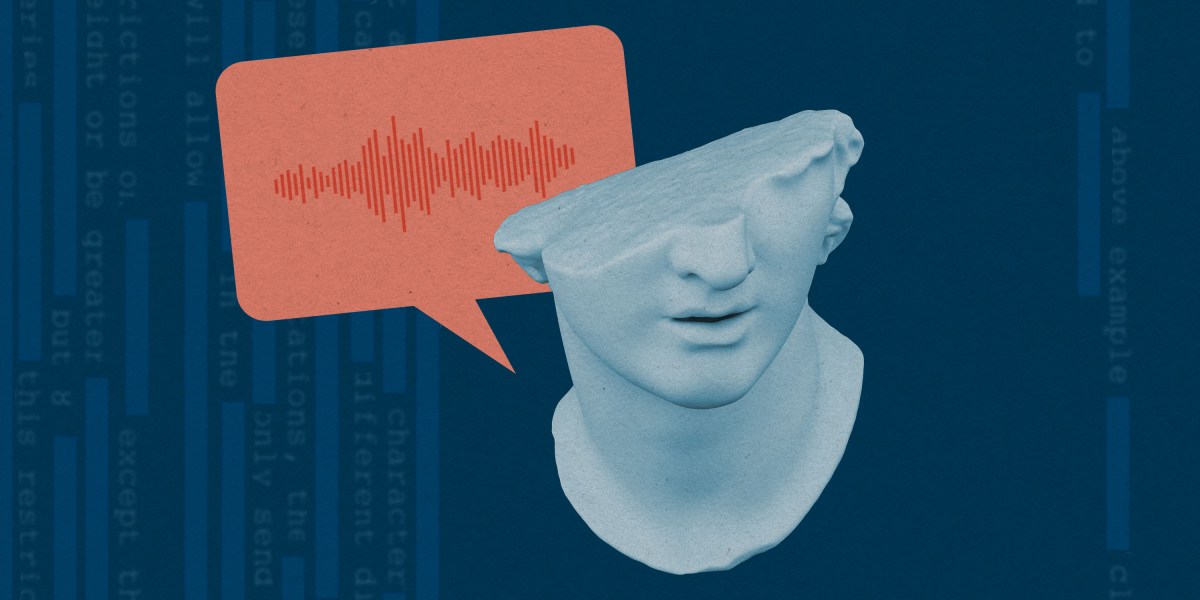

A technique known as “machine unlearning” could teach AI models to forget specific voices—an important step in stopping the rise of audio deepfakes, where someone’s voice is copied to carry out fraud or scams.

Recent advances in artificial intelligence have revolutionized the quality of text-to-speech technology so that people can convincingly re-create a piece of text in any voice, complete with natural speaking patterns and intonations, instead of having to settle for a robotic voice reading it out word by word. “Anyone’s voice can be reproduced or copied with just a few seconds of their voice,” says Jong Hwan Ko, a professor at Sungkyunkwan University in Korea and the coauthor of a new paper that demonstrates one of the first applications of machine unlearning to speech generation.

Copied voices have been used in scams, disinformation, and harassment. Ko, who researches audio processing, and his collaborators wanted to prevent this kind of identity fraud. “People are starting to demand ways to opt out of the unknown generation of their voices without consent,” he says.

AI companies generally keep a tight grip on their models to discourage misuse. For example, if you ask ChatGPT to give you someone’s phone number or instructions for doing something illegal, it will likely just tell you it cannot help. However, as many examples over time have shown, clever prompt engineering or model fine-tuning can sometimes get these models to say things they otherwise wouldn’t. The unwanted information may still be hiding somewhere inside the model so that it can be accessed with the right techniques.

At present, companies tend to deal with this issue by applying guardrails; the idea is to check whether the prompts or the AI’s responses contain disallowed material. Machine unlearning instead asks whether an AI can be made to forget a piece of information that the company doesn’t want it to know. The technique takes a leaky model and the specific training data to be redacted and uses them to create a new model—essentially, a version of the original that never learned that piece of data. While machine unlearning has ties to older techniques in AI research, it’s only in the past couple of years that it’s been applied to large language models.

Jinju Kim, a master’s student at Sungkyunkwan University who worked on the paper with Ko and others, sees guardrails as fences around the bad data put in place to keep people away from it. “You can’t get through the fence, but some people will still try to go under the fence or over the fence,” says Kim. But unlearning, she says, attempts to remove the bad data altogether, so there is nothing behind the fence at all.

The way current text-to-speech systems are designed complicates this a little more, though. These so-called “zero-shot” models use examples of people’s speech to learn to re-create any voice, including those not in the training set. With enough data, such a model can be a good mimic when supplied with even a small sample of someone’s voice. So “unlearning” means a model not only needs to “forget” voices it was trained on but also has to learn not to mimic specific voices it wasn’t trained on. All the while, it still needs to perform well for other voices.

To demonstrate how to get those results, Kim trained a re-creation of VoiceBox, a speech generation model from Meta, so that when it is prompted to produce a text sample in one of the voices to be redacted, it instead responds with a random voice. To make these voices realistic, the model “teaches” itself using random voices of its own creation.
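The idea of a model teaching itself can be sketched as a rule for choosing training targets. The toy Python below is only an illustration, not the researchers' code: `teacher_generate`, `build_training_target`, and the speaker IDs are hypothetical names, and voices are represented as plain labels rather than audio. It assumes the original model, kept frozen, acts as the “teacher” and can invent a voice when it is given no voice prompt.

```python
import random

# Hypothetical speaker IDs; the article does not name any speakers.
FORGET_SPEAKERS = {"speaker_to_forget"}


def teacher_generate(text, voice_prompt, rng):
    """Stand-in for the frozen original model (the "teacher").

    With a voice prompt it imitates that voice; without one it
    invents a voice of its own, represented here by a random label.
    """
    if voice_prompt is None:
        voice = f"random_voice_{rng.randrange(1_000_000)}"
    else:
        voice = voice_prompt
    return {"text": text, "voice": voice}


def build_training_target(text, speaker, rng):
    """Return what the unlearned model should produce for this request."""
    if speaker in FORGET_SPEAKERS:
        # Redacted voice: the target is the teacher's own random-voice output.
        return teacher_generate(text, None, rng)
    # Permitted voice: the target is the usual imitation.
    return teacher_generate(text, speaker, rng)


rng = random.Random(0)
forgotten = build_training_target("hello", "speaker_to_forget", rng)
kept = build_training_target("hello", "speaker_ok", rng)
print(forgotten["voice"], kept["voice"])
```

In a real system, the model would then be fine-tuned toward these targets, which is where the actual forgetting would happen. The article does not describe the loss function or training schedule, so those steps are left out here.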

According to the team’s results, which are to be presented this week at the International Conference on Machine Learning, prompting the model to imitate a voice it has “unlearned” gives back a result that—according to state-of-the-art tools that measure voice similarity—mimics the forgotten voice more than 75% less effectively than the model did before. In practice, this makes the new voice unmistakably different. But the forgetfulness comes at a cost: The model is about 2.8% worse at mimicking permitted voices. While these percentages are a bit hard to interpret, the demo the researchers released online offers very convincing results, both for how well redacted speakers are forgotten and how well the rest are remembered. A sample from the demo is given below.

Ko says the unlearning process can take “several days,” depending on how many speakers the researchers want the model to forget. Their method also requires an audio clip about five minutes long for each speaker whose voice is to be forgotten.

In machine unlearning, pieces of data are often replaced with randomness so that they can’t be reverse-engineered back to the original. In this paper, the randomness for the forgotten speakers is very high—a sign, the authors claim, that they are truly forgotten by the model.

“I have seen people optimizing for randomness in other contexts,” says Vaidehi Patil, a PhD student at the University of North Carolina at Chapel Hill who researches machine unlearning. “This is one of the first works I’ve seen for speech.” Patil is organizing a machine unlearning workshop affiliated with the conference, and the voice unlearning research will also be presented there.

She points out that unlearning itself involves inherent trade-offs between efficiency and forgetfulness because the process can take time and can degrade the usability of the final model. “There’s no free lunch. You have to compromise something,” she says.

Machine unlearning may still be at too early a stage for, say, Meta to introduce Ko and Kim’s methods into VoiceBox. But there is likely to be industry interest. Patil is researching unlearning for Google DeepMind this summer, and while Meta did not respond to a request for comment, it has long hesitated to release VoiceBox to the wider public because it is so vulnerable to misuse.

The voice unlearning team seems optimistic that its work could someday get good enough for real-life deployment. “In real applications, we would need faster and more scalable solutions,” says Ko. “We are trying to find those.”